A|B Test Integration

The Problem

Team

Designer: Myself

Product Manager

Lead engineer

Salesforce Commerce Cloud

Tools to empower brands and retailers in orchestrating a personalized and seamless shopper experience across all touch points (online, in store, with call centers, at airport kiosks, etc).

Our metrics showed that customers were barely using our A|B test tools, and some were very vocal that the tools were getting in the way of optimizing their storefronts. Among other things, they wanted more support for 3rd party tools. Should we in fact fully delegate this to partners?

Roadmap Context

This item was part of our "discovery" track, dedicated to larger investment items requiring more research and validation. We wanted to confirm whether or not it should become part of the delivery roadmap.

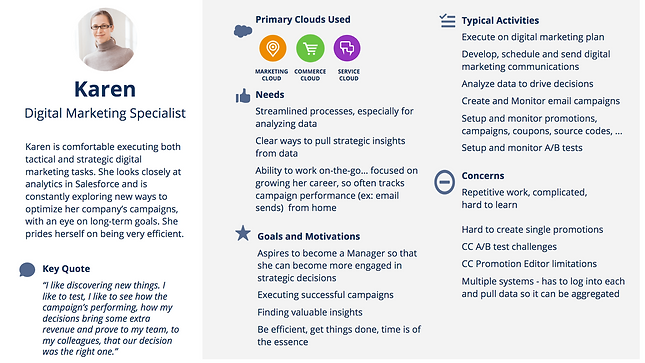

Core Persona

The Marketing persona we were targeting resulted from merging the former Demandware "marketer" with Salesforce's existing Marketing Specialist, who is responsible for setting up campaigns with Marketing Cloud. This updated persona was in fact involved with 3 clouds, including Service Cloud for customer service and call centers.

KPIs

From a business perspective we looked at indicators related to our A/B test issues:

- bugs logged by tech support

- red accounts*

- AOV (Average Order Value) at risk as a whole

*"Red" accounts are accounts where the customer is at risk of not renewing their contract.

Quotes

These are statements that stood out in the interviews I conducted with 7 brands using our platform.

They illustrate the core issues customers encountered. We also looked at the count of red accounts and the total number of customers using A/B Test tools (over 100).

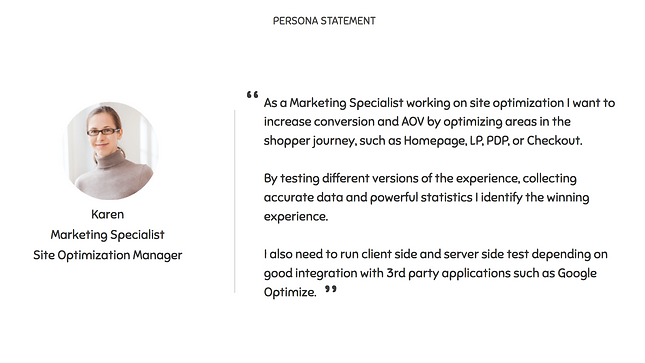

Persona Journey

This was also part of an overarching effort I was leading to break down and understand the full Marketer's Journey, in particular regarding Site Optimization.

Persona Discovery

Together with the Product Manager, I further explored the pain points our customers were reporting, through interviews I conducted. We had tools for them, but those tools didn't meet their current needs.

Understanding their context meant asking: how were they using existing tools for A|B testing? How would our full SaaS platform fit into their process without excluding the 3rd party tools they used?

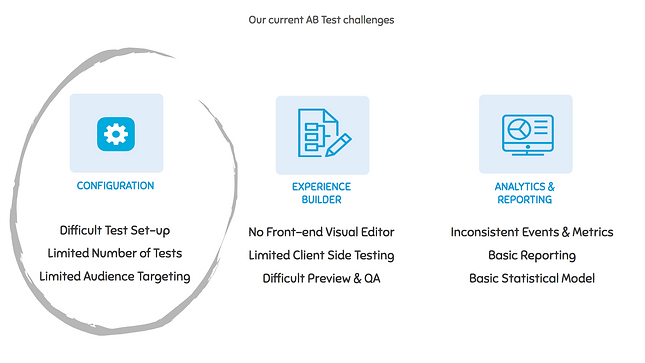

Next: Prioritizing

Research uncovered issues around 3 major areas.

We chose to focus on the 1st one, the A/B test setup. 3rd party tools offered our customers good in-browser "experience builders" on the Client side to edit layout, buttons, etc. They also presented results using advanced visualization (analytics and charts), to which we could feed data.

Hand icon: Phan Thanh Loc, The Noun Project

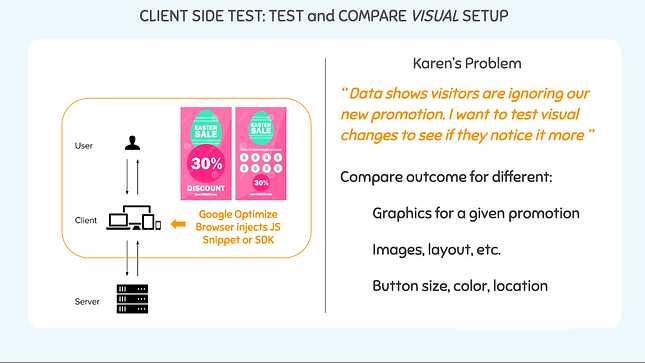

Client side A/B Test

Looking at the setup, it was clear that there are two "flavors" of A/B testing, and the Client side version is where 3rd party tools like Optimize do best.

They enable easy in-browser changes to compare various page layouts: in this case, for instance, different graphic styles to show a 30% discount.

Server Side A/B Test

It became clear that Server side testing is where Commerce Cloud could shine.

It supports access to the kind of data that typical browser-based A/B tools can't reach:

- Promotion rule variants: e.g. Buy One Get One 10% off or 10% off on Easter items.

- Sorting rule variants on your storefront to display your merchandise in different orders.
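To make the server-side idea concrete, here is a minimal sketch of how a server could assign each shopper to a promotion rule variant before the page is rendered. It uses deterministic hash bucketing, a common general technique; it is not a description of how Commerce Cloud implements this. The variant names, `assign_variant`, and the ID formats are all hypothetical.

```python
import hashlib
from typing import Sequence

# Hypothetical promotion rule variants, loosely named after the examples above.
PROMOTION_VARIANTS = ["bogo_10_percent_off", "easter_items_10_percent_off"]


def assign_variant(shopper_id: str, test_id: str, variants: Sequence[str]) -> str:
    """Deterministically map a shopper to one variant of a given test."""
    if not variants:
        raise ValueError("at least one variant is required")
    # Hash the test ID together with the shopper ID so that each test
    # splits shoppers independently of every other test.
    digest = hashlib.sha256(f"{test_id}:{shopper_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same shopper always lands in the same variant for the same test.
chosen = assign_variant("shopper-123", "promo-test-1", PROMOTION_VARIANTS)
```

Because the assignment happens on the server, the chosen variant can drive things a browser script cannot see, such as which promotion or sorting rule is applied to the order.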

Final Flow

The new setup user flow was well received and supports a combination of Server and Client side (via 3rd party) A|B testing.

Engineering tested data integration with the common browser-based A|B test tools our customers used to optimize their storefronts. Those tools could receive the test data and visualize it.

Adoption pace depends on speed of integration with those tools.

(See screenshots of high-fidelity screens delivered here. Created using Salesforce Lightning Design System – SLDS.)

The Commerce Cloud / Demandware platform has offered built-in A/B Testing but with limited analytics and data visualization.

Specialized A/B Testing tools like Optimize have more emphasis on analyzing and visualizing the results (charts, frequency, patterns, etc).

Configuring a test is independent from the actual analysis of the data generated while the test is running.

Configuration involves setting up the assets used for each version of your site, defining the audiences you want to reach, and choosing the variables you want to evaluate (e.g. the discount amount for a product category).
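As an illustration of what such a configuration holds, the sketch below models a test as plain data: assets and variables per variant, plus the target audiences. All class names, field names, and sample values are assumptions made for this example and do not reflect the actual Commerce Cloud data model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Variant:
    name: str
    assets: List[str]            # content assets shown for this version of the site
    variables: Dict[str, float]  # values under evaluation, e.g. a discount amount


@dataclass
class ABTestConfig:
    name: str
    audiences: List[str]         # shopper segments the test should reach
    variants: List[Variant] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return a list of configuration problems; empty means the setup is usable."""
        problems = []
        if len(self.variants) < 2:
            problems.append("a test needs at least two variants")
        if not self.audiences:
            problems.append("a test needs at least one audience")
        names = [v.name for v in self.variants]
        if len(set(names)) != len(names):
            problems.append("variant names must be unique")
        return problems


# Hypothetical setup: two discount levels for one product category.
config = ABTestConfig(
    name="easter-discount-test",
    audiences=["returning-shoppers"],
    variants=[
        Variant("control", ["banner-a.html"], {"easter_category_discount": 0.10}),
        Variant("treatment", ["banner-b.html"], {"easter_category_discount": 0.15}),
    ],
)
```

Nothing in this structure refers to results or charts, which reflects the separation described above: the setup can live on the platform while the analysis is handed to a specialized tool.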